Confluent + WarpStream = Large-Scale Streaming in your Cloud

I’m excited to announce that Confluent has acquired WarpStream, an innovative Kafka-compatible streaming solution with a unique architecture. We’re adding their product to our portfolio alongside Confluent Platform and Confluent Cloud to serve customers who want a cloud-native streaming offering in their own cloud account.

Why add another flavor of streaming? After all, we’ve long offered two major form factors (Confluent Cloud, a fully managed serverless offering, and Confluent Platform, a self-managed software offering), so why complicate things? Well, our goal is to make data streaming the central nervous system of every company, and to do that we need to make it a great fit for a vast array of use cases and companies.

The WarpStream product has a number of great features, including offset-preserving replication, Cluster Quotas (coming soon!), and direct-to-S3 writes like those in Confluent’s Freight clusters. However, the big thing that got our attention was their next-generation approach to bring-your-own-cloud (BYOC) architectures. What do I mean by that? Well, we’ve long felt there was a spectrum of deployment models, running from fully managed SaaS cloud offerings on the left to self-managed software on the right.

Cloud data services solve a lot of problems: you get serverless elastic expansion, fully managed operations, and seamless upgrades and enhancements. But they also require more trust in the vendor (for both security and operations) and require you to sacrifice some control and flexibility. A self-managed offering gives you that flexibility, but requires a lot of work to fully operationalize. For a long time, our feeling was that these two choices were enough, and indeed that the best positions on this spectrum were the far left (full SaaS cloud offerings) and the far right (plain vanilla software offerings).

Customers would sometimes ask for something in the middle: a cloud offering, but in their own cloud. This sounded good, but when we looked at products that worked this way, they were often the worst of both worlds: self-managed data systems that had been forklifted into the cloud with semi-managed models that left responsibility for security and uptime pretty vague. They were meant to be secure because the data didn’t leave the customer’s account, but to install them you had to grant the vendor full privileges in your account, which gave the vendor access not just to your data but to your infrastructure. In doing this, they blurred responsibility for operations between vendor and customer, with neither having sufficient control to diagnose and fix a problem. In addition, these BYOC solutions were built on highly stateful systems that could not take advantage of the primary benefit of running software in the cloud: elasticity. As a result, they always had to be heavily over-provisioned for peak load. In practice, this didn’t seem that compelling.

What WarpStream has shown, though, is how this can be done right: how a system can be built for BYOC that represents a compelling point on this spectrum, with a very nice tradeoff between ease of use and control. They did this by designing specifically for this architecture from the ground up. Their BYOC-native approach offers many of the benefits of a cloud offering while still maintaining strong boundaries for security and operations. This isn’t a panacea (you give up the serverless model of full SaaS), but there is definitely a set of use cases and customers for whom it is the perfect fit.

This unique architectural approach is what got us interested and showed us how it could fit into Confluent. However, it’s far from the only compelling thing about the product and team. From the first time I met Richie and Ryan, the WarpStream founders, I got a strong sense of a team on a mission. They were passionate, opinionated, and impatient, all in the very best way. This personality shows through in their work: WarpStream has moved fast with a fantastic succession of features and innovations, each communicated in a thoughtful, pointed blog post on why that was the exact right thing to build. This combination of tasteful design and quality engineering built an early cohort of passionate customers, and I’m incredibly excited to keep leading this effort and expanding that set of customers as part of Confluent.

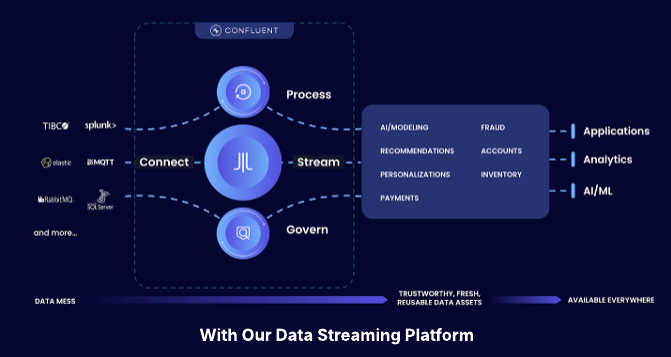

We plan to let this product develop in its own way, and the WarpStream team has exciting ideas about where they want to take it. We expect to share some technology between our offerings, especially our connectors, stream processing, and governance efforts, but as with Confluent Platform and Confluent Cloud, we think it is more important to design a great product for its architecture than to maximize code reuse. Today WarpStream is still a young startup product, so it won’t immediately be a fit for all customers, but we plan to invest in security and hardening over time to bring it up to the same enterprise-grade standards as Confluent Platform and Confluent Cloud, and to integrate it into our systems for ease of signup, billing, and account management.

Where is WarpStream the best fit today? I think it is a great solution for large-scale use cases involving huge observability streams, which benefit immediately from WarpStream’s S3-native architecture and flexible deployment model. But our plan over time is to follow the technology and see where customers take it, as we have with our other product offerings.

Welcome to the WarpStream team and customers! We couldn’t be more excited to work with you.