
Author: Adam Bellemare

Shift Left: Headless Data Architecture, Part 2

Building a headless data architecture requires us to identify the work we’re already doing deep inside our data analytics plane, and shift it to the left. Learn the specifics in this blog.

Shift Left: Headless Data Architecture, Part 1

A headless data architecture means no longer having to coordinate multiple copies of data, and being free to use whatever processing or query engine is most suitable for the job. This blog details how it works.

Shift Left: Bad Data in Event Streams, Part 2

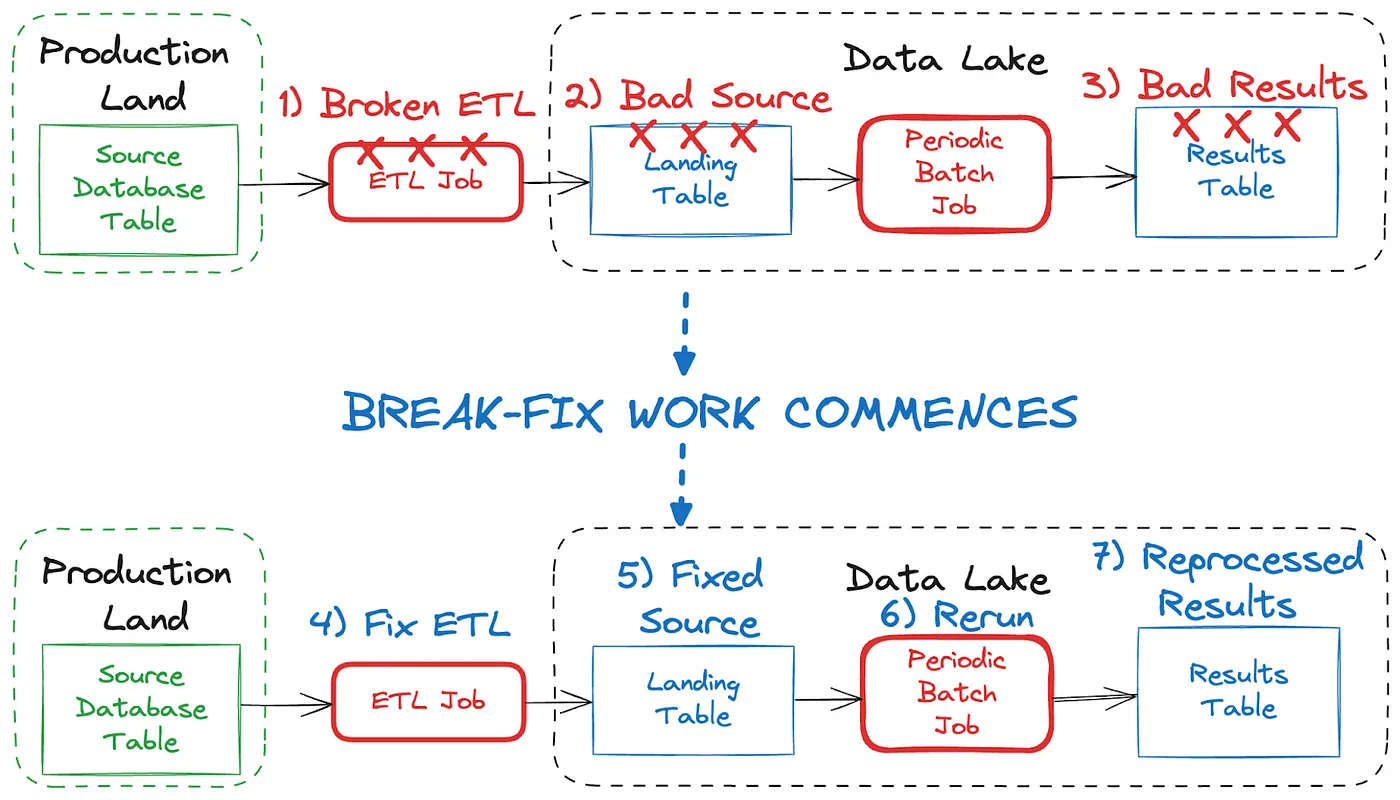

Event design plays a big role in your ability to fix bad data in your streams. But if you’ve wrecked a stream with bad data (i.e., it’s unavoidably contaminated), you’ll need to employ a “rewind, rebuild, and retry” strategy.

Shift Left: Bad Data in Event Streams, Part 1

At a high level, bad data is data that doesn’t conform to what is expected, and it can cause serious issues and outages for all downstream data users. This blog looks at how bad data may come to be, and how we can deal with it when it comes to event streams.

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Whatever Happened to Big Data?

The big data revolution of the early 2000s saw rapid growth in data creation, storage, and processing. A new set of architectures, tools, and technologies emerged to meet the demand. But what of big data today? You seldom hear of it anymore. Where has it gone?

The Definitive Guide to Building a Data Mesh with Event Streams

Data mesh. This oft-talked-about architecture has no shortage of blog posts, conference talks, podcasts, and discussions. One thing that you may have found lacking is a concrete guide on precisely […]

Crossing the Streams: The New Streaming Foreign-Key Join Feature in Kafka Streams

Companies adopt streaming data and Apache Kafka® because they provide real-time information about their business and customers. In practice, the challenge is that this information is spread across […]