
Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

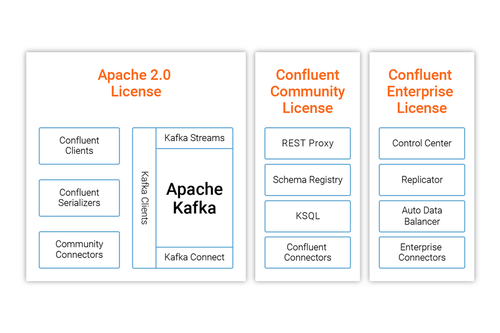

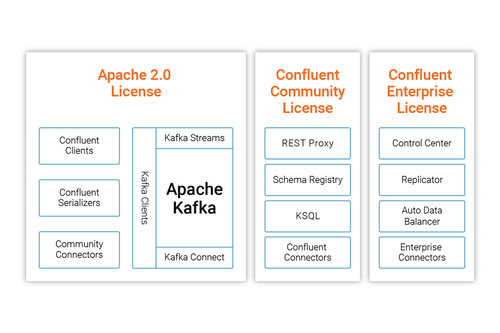

A Developer’s Guide to the Confluent Community License

Last week we announced that we changed the license of some of the components of Confluent Platform from Apache 2.0 to the Confluent Community License. We are really grateful to […]

12 Days of Tech Tips

For the first 12 days of December, Confluent shared a daily tech tip related to managing Apache Kafka® in the cloud. These tips make it easier for you to get […]

License Changes for Confluent Platform

We’re changing the license for some of the components of Confluent Platform from Apache 2.0 to the Confluent Community License. This new license allows you to freely download, modify, and […]

Deep Dive into ksqlDB Deployment Options

The phrase "time value of data" has been used to demonstrate that the value of captured data diminishes over time. This means that the sooner the data is captured, analyzed […]

Kafka Streams and KSQL with Minimum Privileges

The principle of least privilege dictates that each user and application will have the minimal privileges required to do their job. When applied to Apache Kafka® and its Streams API, […]

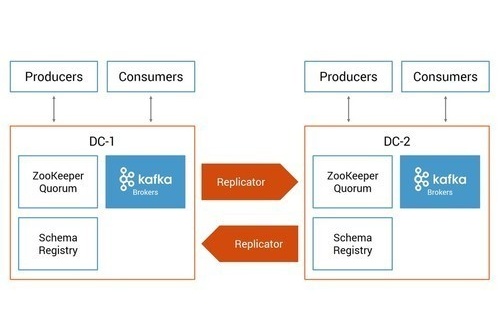

3 Ways to Prepare for Disaster Recovery in Multi-Datacenter Apache Kafka Deployments

Imagine that disaster strikes: a catastrophic hardware failure, software failure, power outage, denial-of-service attack, or some other event causes one datacenter with an Apache Kafka® cluster to fail completely. Yet Kafka […]

Streaming in the Clouds: Where to Start

Only a few years ago, when someone said they had a “cloud-first strategy,” you knew exactly who their new preferred vendor was. These days, however, the story is a lot […]

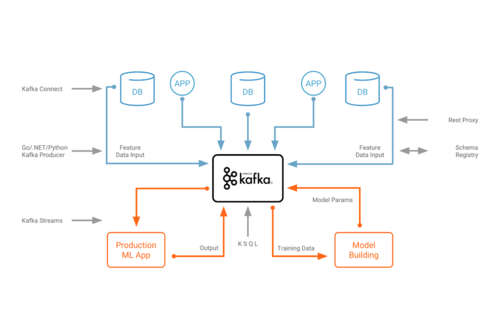

Using Apache Kafka to Drive Cutting-Edge Machine Learning

Machine learning and the Apache Kafka® ecosystem are a great combination for training and deploying analytic models at scale. I had previously discussed potential use cases and architectures for machine […]

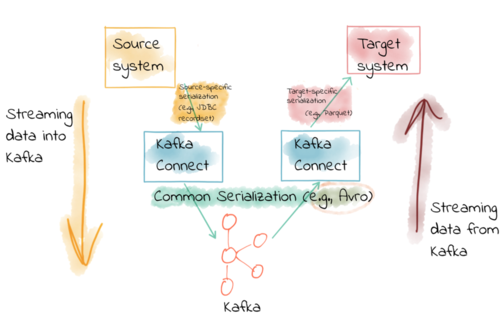

Kafka Connect Deep Dive – Converters and Serialization Explained

Kafka Connect is part of Apache Kafka®, providing streaming integration between data stores and Kafka. For data engineers, it just requires JSON configuration files to use. There are connectors for […]

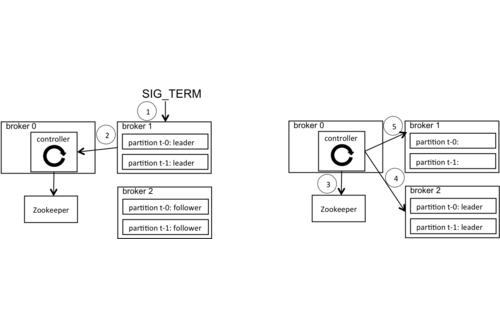

Apache Kafka Supports 200K Partitions Per Cluster

In Kafka, a topic can have multiple partitions to which records are distributed. Partitions are the unit of parallelism. In general, more partitions lead to higher throughput. However, there are […]

How to Work with Apache Kafka in Your Spring Boot Application

Choosing the right messaging system during your architectural planning is always a challenge, yet one of the most important considerations to nail. As a developer, I write applications daily that […]

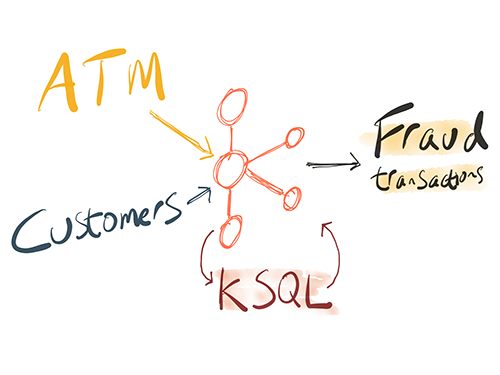

ATM Fraud Detection with Apache Kafka and KSQL

Fraud detection is a topic applicable to many industries, including banking and financial sectors, insurance, government agencies, law enforcement, and more. Fraud attempts have seen a drastic increase in […]

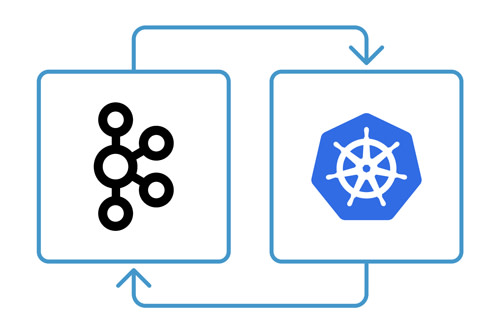

Apache Kafka on Kubernetes – Could You? Should You?

When Confluent launched the Helm Charts and early access program for Confluent Operator, we published a blog post explaining how to easily run Apache Kafka® on Kubernetes. Since then, we’ve […]

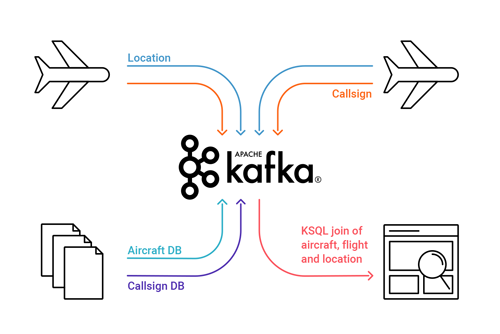

Noise Mapping with KSQL, a Raspberry Pi and a Software-Defined Radio

Our new cat, Snowy, is waking early. She is startled by the noise of jets flying over our house. Can I determine which plane is upsetting her by utilizing Apache […]