Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Internet of Things (IoT) and Event Streaming at Scale with Apache Kafka and MQTT

The Internet of Things (IoT) is getting more and more traction as valuable use cases come to light. A key challenge, however, is integrating devices and machines to process the […]

Kafka Summit San Francisco 2019 Session Videos

Last week, the Kafka Summit hosted nearly 2,000 people from 40 different countries and 595 companies—the largest Summit yet. By the numbers, we got to enjoy four keynote speakers, 56 […]

Building a Real-Time, Event-Driven Stock Platform at Euronext

As the head of global customer marketing at Confluent, I tell people I have the best job. As we provide a complete event streaming platform that is radically changing how […]

How to Run Apache Kafka with Spring Boot on Pivotal Application Service (PAS)

This tutorial describes how to set up a sample Spring Boot application in Pivotal Application Service (PAS), which consumes and produces events to an Apache Kafka® cluster running in Pivotal […]

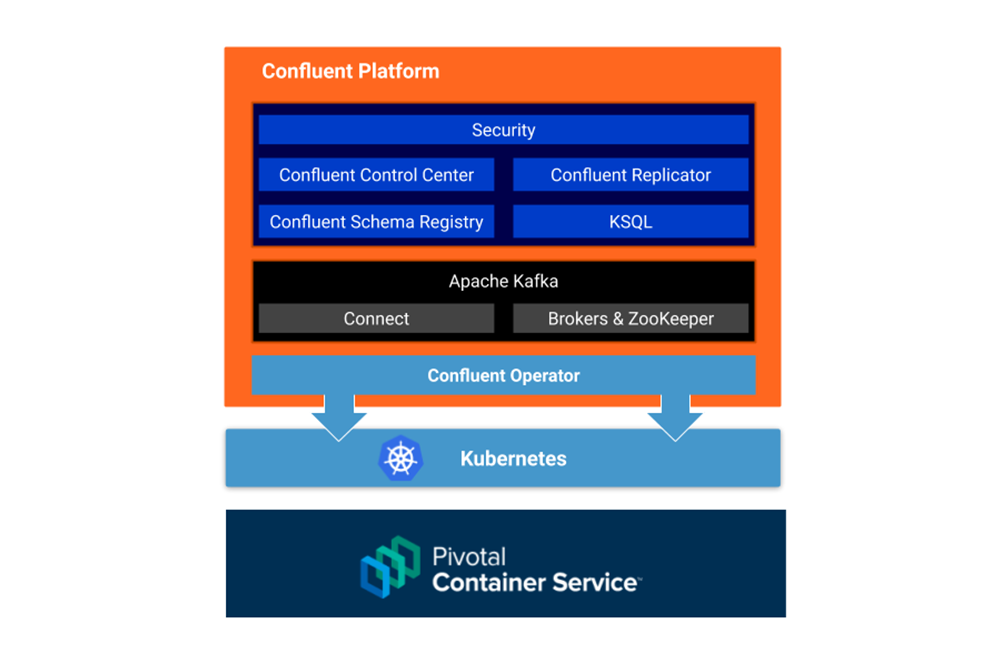

How to Deploy Confluent Platform on Pivotal Container Service (PKS) with Confluent Operator

This tutorial describes how to set up an Apache Kafka® cluster on Enterprise Pivotal Container Service (Enterprise PKS) using Confluent Operator, which allows you to deploy and run Confluent Platform […]

Why Scrapinghub’s AutoExtract Chose Confluent Cloud for Their Apache Kafka Needs

We recently launched a new artificial intelligence (AI) data extraction API called Scrapinghub AutoExtract, which turns article and product pages into structured data. At Scrapinghub, we specialize in web data […]

Kafka Summit San Francisco 2019: Day 2 Recap

If you looked at the Kafka Summits I’ve been a part of as a sequence of immutable events (and they are, unless you know something about time I don’t), it […]

Kafka Summit San Francisco 2019: Day 1 Recap

Day 1 of the event, summarized for your convenience. They say you never forget your first Kafka Summit. Mine was in New York City in 2017, and it had, what, […]

Free Apache Kafka as a Service with Confluent Cloud

Go from zero to production on Apache Kafka® without talking to sales reps or building infrastructure. Apache Kafka is the standard for event-driven applications. But it’s not without its challenges, […]

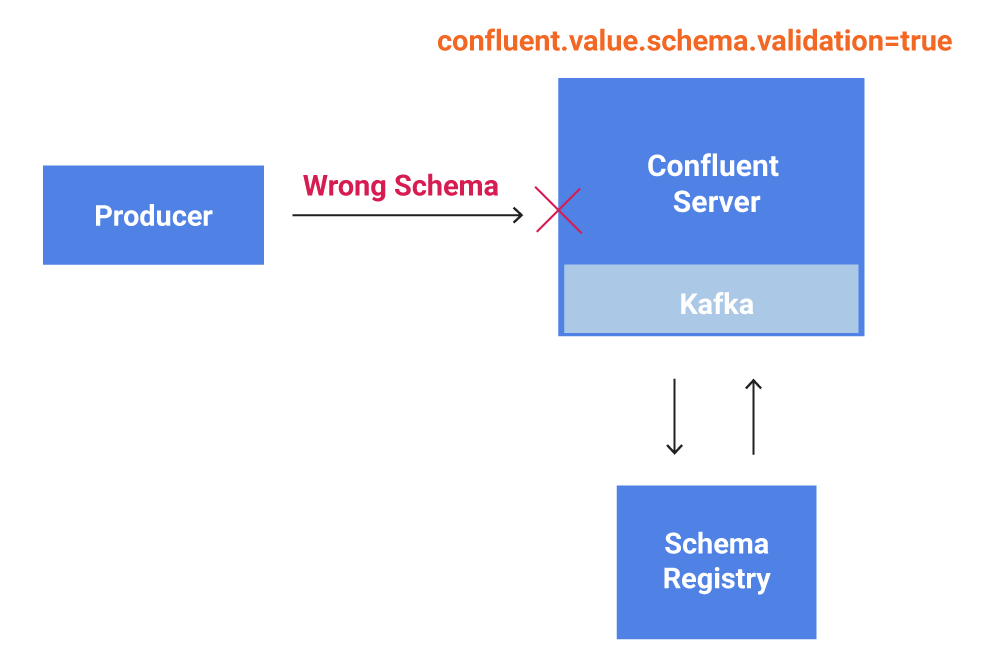

Schema Validation with Confluent Platform 5.4

Robust data governance support through Schema Validation on write is now supported in Confluent Platform 5.4. Schema Validation enables the broker to verify that data produced to an Apache Kafka® […]

Real-Time Analytics and Monitoring Dashboards with Apache Kafka and Rockset

In the early days, many companies simply used Apache Kafka® for data ingestion into Hadoop or another data lake. However, Apache Kafka is more than just messaging. The significant difference […]

Every Company is Becoming a Software Company

In 2011, Marc Andreessen wrote an article called Why Software Is Eating the World. The central idea is that any process that can be moved into software will be. This […]

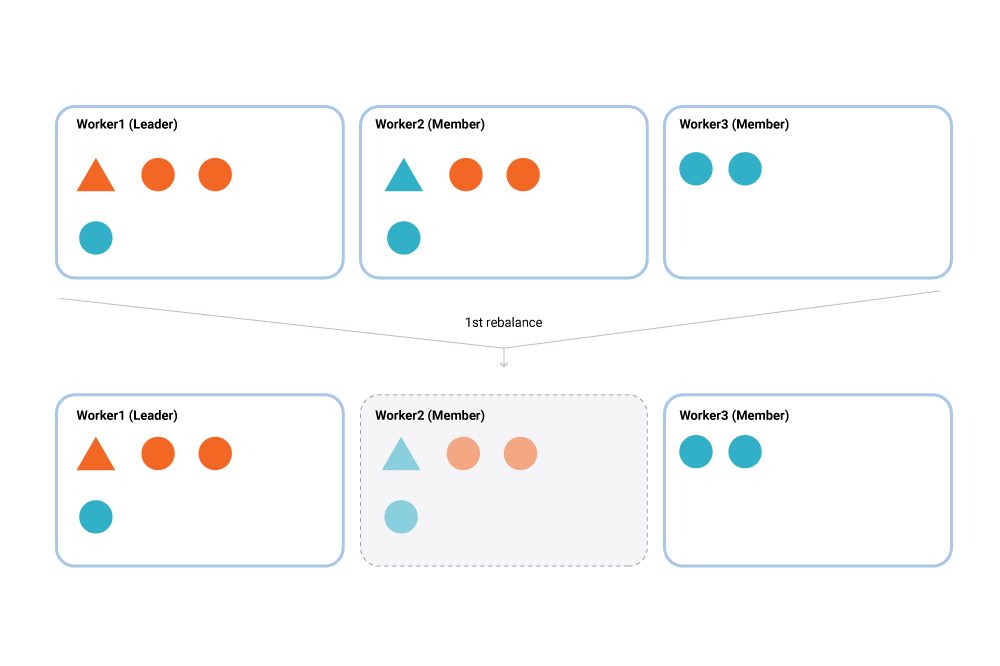

Incremental Cooperative Rebalancing in Apache Kafka: Why Stop the World When You Can Change It?

There is a coming and a going / A parting and often no—meeting again. —Franz Kafka, 1897 Load balancing and scheduling are at the heart of every distributed system, and […]

How to Make the Most of Kafka Summit San Francisco 2019

Kafka Summit San Francisco is just one week away. Conferences can be busy affairs, so here are some tips on getting the most out of your time there. Plan Go […]